Memories as Data: Deep Neural Network Learning

A Joint Artificial Cognitive Declarative Memory Storage Map and Episodic Recall Promoter Methodology to Dynamically Store, Retrieve, and Recall Deep Neural Network Learning Data Derived from Aggregate Student Learning

Rachel Naidich and Matthew Trang

Thomas Jefferson High School for Science and Technology

This paper was originally included in the 2019 print publication of the Teknos Science Journal.

Abstract

The Omega Learning Map is a unique approach to storing and retrieving massive student learning datasets within an artificial cognitive declarative memory model. This new memory storage model is based on the human declarative memory model and is useful for storage and retrieval of the immense volume of student data. This data is derived from utilizing multiple interleaved deep machine-learning artificial intelligence models to parse, tag, and index academic, communicative, and social student data cohorts that are available for capture in an Aggregate Student Learning Environment. In addition, the declarative memory model is designed to include artificial Episodic Recall Promoters stored in long-term and universal artificial cognitive memory modules to assist students with recall and knowledge acquisition.

Introduction

Just as “Big Data” datasets allow mapping and analysis of human genomes to find ways to effectively combat disease and improve health, it is now possible to use big datasets to help students find their best learning state and academic environment, identify intervention methodologies and support mechanisms, and map a road to improved student learning. With the rise of online learning, new software platforms have been developed to collect student learning data. Research on assessment approaches and on techniques for analyzing the data collected from students has contributed to the study of student retention rates and the efficacy of different remediation methods. However, the results have been mixed when applied to understanding the multifaceted causes behind varying student attrition and retention rates, completion metrics, and overall student learning attainment.

Some argue that what hinders the search for pathways to online students’ academic success is not the research methodology but the limited sources of online platform data used in these studies. Some parts of the Aggregate Student Learning (ASL) experience are neglected, such as how a student’s personal, professional, and other external experiences may affect their academic output. Variables like financial condition, romantic relationships, personality type, mood, and political beliefs could significantly affect academic achievement and could be analyzed alongside traditional achievement metrics using new algorithms and newly trained machine learning models. As Chris Dede and Andrew Ho noted in their recent article in Educause Review, “We believe that what is happening with data-intensive research and analysis today is comparable to the inventions of the microscope and telescope. Both of these devices revealed new types of data that had always been present but never before accessible” (2016). The ASL datasets had never been accessible, qualified, or collated within an academic experience before the advent of online education and of digital data learning sciences and engineering.

The goal of this study is to review current research on student learning and the factors that influence academic achievement in order to design a new theoretical machine learning model, based on human memory models, that stores and retrieves large ASL datasets. The model would parse, tag, and index all important information relating to academic achievement. Because it is based on human memory, it would have the detail and complexity necessary to accurately store and record information for every student. Using this immense amount of data, the invention may increase academic achievement and revolutionize the learning experiences of online students by placing them in the best possible learning environment, promoting recall of information, and providing services such as academic advising, social suggestions, and counseling.

Methods

ASL is a contemporary revision to the definition of Whole Student Learning, which is generally understood in post-secondary education to be the inclusion of support from the offices of Student Affairs, Student Counseling, and Student Life in a student’s overall learning plan [23]. ASL can be defined as a unified consideration, analysis, and assessment of academic subject data and non-subject socially shared data points in measuring student achievement within a student’s overall academic rubric.

Deep Academic Learning Intelligence (DALI) System and Interfaces [20] is a deep neural language network (DNLN) matrix x matrix (M x M) machine-learning (ML) artificial intelligence model that provides students with the academic advising, personal counseling, and individual mentoring available in an ASL Environment. Its datasets include academic performance history, understanding of subject-based and non-subject-based communication content, and social and interpersonal behavioral analysis. Over time, DALI will “learn” how each student’s evolving external environment, condition, state, and situation affect or may affect that student’s academic performance within an online learning platform. Upon detecting a potential issue, DALI will make appropriate corrective suggestions and recommendations to the student to remediate and modify potential negative outcomes. The student trains their DALI DNLN ML model by reporting whether the suggestion or recommendation was followed and how they responded to it. Within an online learning platform, every student’s initial grouping data, dynamic regrouping, DALI recommendation history, ERP recall, and results are stored in that student’s Omega Learning Map (ΩLM).

The ΩLM is a unique approach to storing and retrieving student learning data within an artificial cognitive declarative memory model. Within an ASL environment, machine learning natural language analysis and processing models parse, tag, and index the data available in dynamic regrouping student communication channels, and multiple interleaved machine-learning artificial intelligence models parse DALI’s suggestions and the responses to them. The new memory model is required to store and retrieve, in a useful way, the immense volume of student data these models produce. In addition, the declarative memory model is designed to include artificial Episodic Recall Promoters (ERP), stored in long-term and universal memory modules, to assist students with recall of academic subject matter as it relates to advanced knowledge acquisition.

Depending on student responses to the recommendations provided by DALI, and on the resulting decline or improvement in academic performance, each student’s ΩLM will adapt and evolve, allowing DALI to “learn” more about the value of each suggestion and to provide more relevant recommendations.

The Knowledge Acquisition System (KAS) is an expanded and refined version of the after-image, primary, and secondary memory model first proposed by William James in 1890 [15]. The inventors transpose James’s after-image memory into a Sensory Memory Module. This module contains both current and historical learner data, defined as semantic inputs, and the channels (text, spoken, visual) of the learner’s experiences surrounding the acquisition of those semantic inputs, defined as episodic inputs. The inventors also divide James’s primary memory into Working and Short-Term Memory Modules. Finally, James’s secondary memory becomes a unique Long-Term Memory Module that contains a learner’s Declarative Memory, including Semantic and Episodic Memory inputs, as well as a Universal Memory Bank. These simulated cognitive software structures are needed to correctly decipher, identify, tag, index, and store all the student data available within an online learning platform, and to retrieve and transmit the data back to the learner within the context of a relevant academic experience.

Outlined in Fig. 1, the KAS is a mathematical construct to decipher, store, and transfer the ASL data, parsed, tagged, and indexed by DALI’s DNLN, that creates and informs a student’s ΩLM. The goal of the KAS is to accurately record and store information. It is divided into four major variable groups, each representing a human memory model: Sensory Memory (v), Working Memory (w), Short-Term Memory (m), and Long-Term Memory (l). Another unique feature of the KAS invention is the Universal Memory Bank (j) (UMB).
The UMB tags and indexes parsed student data from an integrated cohort vector experience, which is the sum of the responses to DALI’s suggestions and the follow-up Helpful responses, and which may represent potential universal conditions that another student may experience in the future.
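The text does not specify how the ΩLM “learns” the value of each suggestion. One simple possibility, sketched below purely as an assumption, keeps a running average of the change in academic performance observed after each suggestion the student reports having followed. The class name, the performance-change signal, and the rule of ignoring unfollowed suggestions are all hypothetical.

```python
from collections import defaultdict
from typing import Dict, List


class SuggestionValues:
    """Hypothetical running mean of the performance change after each followed suggestion."""

    def __init__(self) -> None:
        self.counts: Dict[str, int] = defaultdict(int)
        self.values: Dict[str, float] = defaultdict(float)

    def record(self, suggestion: str, followed: bool, performance_change: float) -> None:
        # Assumption: a suggestion the student did not follow says nothing about its value.
        if not followed:
            return
        self.counts[suggestion] += 1
        n = self.counts[suggestion]
        # Incremental mean: v_n = v_(n-1) + (r_n - v_(n-1)) / n
        self.values[suggestion] += (performance_change - self.values[suggestion]) / n

    def ranked(self) -> List[str]:
        """Suggestions ordered from most to least helpful so far."""
        return sorted(self.values, key=self.values.__getitem__, reverse=True)


# Hypothetical usage: performance changes are illustrative grade deltas.
sv = SuggestionValues()
sv.record("study group", True, 0.10)
sv.record("study group", True, 0.30)
sv.record("office hours", True, -0.05)
sv.record("tutoring", False, 0.50)
print(sv.ranked())
```

The incremental-mean update avoids storing every past outcome, which suits a map that grows with every recommendation.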

Results

Sensory Memory (v) Module

Sensory Memory is defined as the ability to retain neuropsychological impressions of sensory information after the offset of the initial stimuli [8]. Sensory Memory includes both sensory storage and perceptual memory: sensory storage accounts for the initial maintenance of detected stimuli, and perceptual memory is the outcome of processing that sensory storage [19]. In the context of the KAS, the Sensory Memory also serves to recognize stimuli derived from various sources, including, but not limited to, academic performance, internal academics-related communication factors, external non-academic extenuating circumstances, and behavioral analyses. These Sensory Memory inputs are divided into two categories: Semantic and Episodic inputs. Semantic inputs comprise all data regarding general information about the student, such as Traditional Achievement, Non-Traditional Achievement, Foundational Data, Parsed Student Subject and Non-Subject Communication channel data, and historical data from an online learning platform experience. Episodic inputs consist of all data regarding an individual’s personal event experiences, such as information parsed, tagged, and indexed from the communication channels, from instructor-to-student and student-to-instructor exchanges, and from social subject and non-subject texts and Audio/Visual channels. Figure 2 outlines a block diagram of the Sensory Memory Module in the KAS.

Working Memory in the human frontal cortex serves as a limited storage system for the temporary recall and manipulation of information. In the Baddeley and Hitch model, Working Memory stores a limited amount of information within two neural loops: the phonological loop for verbal material and the visuospatial sketchpad for visuospatial material [2]. Data stored in Working Memory lasts only until new data arrives to take its place; older data in the queue is either moved into Short-Term Memory or forgotten. Figure 3 outlines the KAS Working Memory Module.
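The displacement behavior described above can be sketched as a fixed-capacity buffer. This is a minimal illustration under stated assumptions: the capacity and the `keep` rule that decides whether a displaced dataset moves to Short-Term Memory or is forgotten are hypothetical parameters, and the direct transfer to Long-Term Memory mentioned later is omitted for brevity.

```python
from collections import deque
from typing import Any, Callable, Deque, List


class WorkingMemory:
    """Fixed-capacity buffer in which each new dataset displaces the oldest one."""

    def __init__(self, capacity: int, keep: Callable[[Any], bool]) -> None:
        self.capacity = capacity
        self.keep = keep                  # hypothetical rule: pass a displaced item to STM?
        self.buffer: Deque[Any] = deque()
        self.short_term: List[Any] = []   # displaced items moved on to STM
        self.forgotten: List[Any] = []    # displaced items that are dropped

    def add(self, dataset: Any) -> None:
        self.buffer.append(dataset)
        if len(self.buffer) > self.capacity:
            oldest = self.buffer.popleft()
            (self.short_term if self.keep(oldest) else self.forgotten).append(oldest)


# Hypothetical usage: a capacity of 2 and an "important" flag on each dataset.
wm = WorkingMemory(capacity=2, keep=lambda d: d["important"])
for i, important in enumerate([True, False, True, True]):
    wm.add({"id": i, "important": important})
```

A `deque` gives constant-time removal from the front, which is the operation every arrival triggers once the buffer is full.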

Wj is the probability that the dataset in W1 is lost when a new dataset arrives in W2 (or vice versa). Therefore, W1 + W2 + ... + Wn = 1, since every time a new dataset enters the Working Memory Module within a >30 s timeframe, the previous dataset is pushed to the Short-Term Memory, transferred to the Long-Term Memory (LTM) Module, or forgotten. All inputs from the Sensory Memory are sent to the Working Memory Module, which acts as temporary storage and as an Information Classifier similar to the central executive described by Baddeley and Hitch (1974) [2]: it directs information through the Working Memory system’s information classification loops (neural loops), then retrieves the classified information and directs it to its next destination, as seen in Figure 4. The Working Memory identifies each input as W1 (text), W2 (audio/visual), or W3 (communication/social), tags the data with the relevant channel identifiers, and determines whether the parsed data can be categorized into the fields of Traditional Achievement, Communication, and Social (Fig. 3). After classification, semantic and episodic inputs are separated, and all semantic information is designated as important. Semantic inputs are distributed directly to the LTM Module, and episodic inputs are sent to the Short-Term Memory Module.
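The classification and routing step can be sketched as follows. The channel identifiers W1 to W3, the three fields, and the semantic-to-LTM and episodic-to-STM rule come from the text; the raw channel keys, the data class, and the choice to leave uncategorizable inputs unrouted are assumptions, since the text does not say what happens to inputs that fit no field.

```python
from dataclasses import dataclass
from typing import List, Optional

# Channel identifiers from the text; the raw channel keys are hypothetical.
CHANNELS = {"text": "W1", "audio_visual": "W2", "communication_social": "W3"}
FIELDS = {"Traditional Achievement", "Communication", "Social"}


@dataclass
class TaggedInput:
    channel: str   # "W1", "W2" or "W3"
    field: str     # one of FIELDS
    kind: str      # "semantic" or "episodic"
    payload: str


def route(raw_channel: str, field: str, kind: str, payload: str,
          ltm: List[TaggedInput], stm: List[TaggedInput]) -> Optional[TaggedInput]:
    """Tag one Sensory Memory input and send it to LTM (semantic) or STM (episodic)."""
    if kind not in ("semantic", "episodic"):
        raise ValueError(f"unknown input kind: {kind!r}")
    if field not in FIELDS:
        return None  # assumption: inputs that fit no field are left unrouted
    item = TaggedInput(CHANNELS[raw_channel], field, kind, payload)
    # Semantic inputs are all treated as important and bypass the STM filter.
    (ltm if kind == "semantic" else stm).append(item)
    return item


ltm: List[TaggedInput] = []
stm: List[TaggedInput] = []
route("text", "Traditional Achievement", "semantic", "quiz score 92", ltm, stm)
route("communication_social", "Social", "episodic", "forum post", ltm, stm)
```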

Let W1 (or W2 or W3) be a vector (W1a, W1b, W1c, ..., W1n), whose components a through n are the sub-variables representing the datasets outlined in Fig. 4. Applying Bayes’ Theorem [27] gives the probability of classifying a sub-variable dataset in W1 into class k of sub-variable group C, where k ranges over the possible outcomes of classification.

Logistic regression is then used to classify and predict the sub-variable classes, or datasets.

Using multinomial logistic regression, DALI applies the Softmax Function [5] to the final layer of the DNLN to obtain the class probabilities.
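For reference, the standard textbook forms of the Bayes and logistic-regression steps are written out below in the notation above. The naive conditional-independence factorization and the weights β0 and β are assumptions of this sketch, not details stated in the text, so the authors’ exact expressions may differ.

```latex
% Bayes' theorem for class k of sub-variable group C
P(C_k \mid W_1) = \frac{P(C_k)\, P(W_1 \mid C_k)}{P(W_1)},
\qquad W_1 = (W_{1a}, W_{1b}, \dots, W_{1n})

% with an (assumed) naive conditional-independence factorization over the components
P(C_k \mid W_1) = \frac{P(C_k) \prod_{i \in \{a,\dots,n\}} P(W_{1i} \mid C_k)}
                       {\sum_{j} P(C_j) \prod_{i \in \{a,\dots,n\}} P(W_{1i} \mid C_j)}

% binary logistic regression with (assumed) weights \beta_0 and \boldsymbol{\beta}
P(C = 1 \mid W_1) = \sigma\!\left(\beta_0 + \boldsymbol{\beta}^{\top} W_1\right)
                  = \frac{1}{1 + e^{-(\beta_0 + \boldsymbol{\beta}^{\top} W_1)}}
```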
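A minimal sketch of the softmax step in plain Python, assuming one linear score per class (multinomial logistic regression), so that P(C = k | W1) = exp(z_k) / Σ_j exp(z_j) with z_k = b_k + w_k · W1. The weights, biases, and two-feature input are hypothetical; the class labels are the three Working Memory fields named in the text.

```python
import math
from typing import List, Sequence, Tuple


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax: shift by the max score before exponentiating."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def class_probabilities(weights: Sequence[Sequence[float]], biases: Sequence[float],
                        x: Sequence[float]) -> List[float]:
    """Multinomial logistic regression: one linear score z_k = b_k + w_k . x per class."""
    scores = [b + sum(wi * xi for wi, xi in zip(w, x)) for w, b in zip(weights, biases)]
    return softmax(scores)


def classify(weights: Sequence[Sequence[float]], biases: Sequence[float],
             x: Sequence[float]) -> Tuple[int, List[float]]:
    """Index of the most probable class, together with the full distribution."""
    probs = class_probabilities(weights, biases, x)
    best = max(range(len(probs)), key=probs.__getitem__)
    return best, probs


# Hypothetical example: three classes and two features.
labels = ["Traditional Achievement", "Communication", "Social"]
weights = [[2.0, 0.0], [0.0, 2.0], [0.5, 0.5]]
biases = [0.0, 0.0, 0.0]
best, probs = classify(weights, biases, [1.0, 0.0])
print(labels[best], [round(p, 3) for p in probs])
```

Subtracting the maximum score before exponentiating leaves the probabilities unchanged but avoids overflow when scores are large.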

Short-Term Memory (m) Module

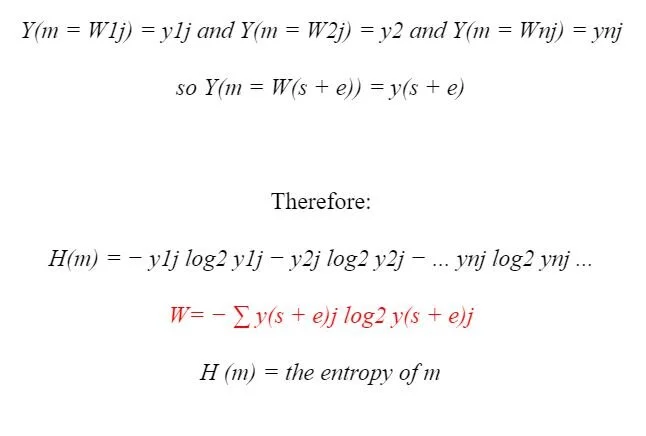

The Short-Term Memory (STM) serves as a filter that allows the Knowledge Acquisition System (KAS) either to store data for a short period of time or to delete it, by calculating the entropy of the data. The KAS STM Module is modeled on characteristics of the human prefrontal cortex, so it tends to retain information with high emotional value and is more likely to store negative information (m1) than positive (m2) or neutral information (mø) [17]. Figure 5 outlines the KAS STM Module. First, if the input data matches any of the three data fields W1j, W2j, or W3j, the material is relevant and is categorized as important (high entropy). If not, the module conducts a machine-learning Sentiment Analysis, using models trained on 200,000 phrases from 12,000 parsed sentences, and weights datasets with strong sentiment as important and those with weak sentiment as unimportant. Finally, if a dataset has still been deemed unimportant, the module performs a further analysis using machine-learning Emotional Content Analysis models already trained on 25,000 previously tagged phrases from 2,000 parsed sentences, and assigns larger weights to datasets with higher emotional content. Datasets with high sentiment or emotion are considered relevant because they provide emotional context and may reflect students’ underlying motivations [6]. The STM module uses a modified version of the Shannon Entropy Equations [24] and categorizes any data that does not pass these three filters as low entropy, deleting it from storage. The system then categorizes all high-entropy data as either subject or non-subject matter and passes it to the Long-Term Memory (LTM), where it is stored in the student’s ΩLM. Fig. 6 describes the entropy filtering process.
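The three-stage filter can be sketched as a cascade in which each stage is consulted only if the previous one rejected the item. The trained sentiment and emotion models are replaced here by caller-supplied scoring functions, and all thresholds are hypothetical; setting the bar for negative sentiment lower than for positive sentiment is one assumed way to express the bias toward storing negative information.

```python
from typing import Callable, Iterable, List, Optional, Set, Tuple


def keep_in_stm(item: str,
                known_fields: Set[str],
                field_of: Callable[[str], Optional[str]],
                sentiment: Callable[[str], float],
                emotion: Callable[[str], float],
                pos_threshold: float = 0.6,
                neg_threshold: float = 0.4,
                emotion_threshold: float = 0.5) -> bool:
    """Return True to keep an item ("high entropy"), False to forget it ("low entropy")."""
    # Filter 1: the item matches a Working Memory field (W1j, W2j, W3j).
    if field_of(item) in known_fields:
        return True
    # Filter 2: strong sentiment, scored in [-1, 1]; the negative bar is lower.
    score = sentiment(item)
    if score >= pos_threshold or score <= -neg_threshold:
        return True
    # Filter 3: strong emotional content, scored in [0, 1].
    return emotion(item) >= emotion_threshold


def partition(items: Iterable[str], **filters) -> Tuple[List[str], List[str]]:
    """Split items into (kept, forgotten) using keep_in_stm."""
    kept: List[str] = []
    forgotten: List[str] = []
    for item in items:
        (kept if keep_in_stm(item, **filters) else forgotten).append(item)
    return kept, forgotten
```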

Let the dataset input be m, where m can take only one of the (s) or (e) values of W1j, W2j, W3j, ..., Wnj, W(s+e). Y is a function that returns a high value if m falls under the associated W classification and a low value otherwise. The magnitude of these values is determined through communication with the Procedural Memory Module, as discussed later in the Procedural Memory section.

High entropy means that m is determined to be either high (strongly positive in sentiment and emotion) or low (strongly negative in sentiment and emotion). Insignificant m data would, mathematically, settle into a quasi-steady state and be forgotten (deleted).
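The text cites a modified version of Shannon’s entropy equations [24] but does not give the modification, so the sketch below computes only the standard, unmodified quantity H = Σ p(x) log2(1/p(x)) over a sequence of symbols, such as the sentiment labels of a dataset. How the paper maps this quantity onto its “high” and “low” entropy labels is not specified and is not reproduced here.

```python
import math
from collections import Counter
from typing import Hashable, Iterable


def shannon_entropy(symbols: Iterable[Hashable]) -> float:
    """Standard Shannon entropy, in bits, of the empirical distribution of symbols."""
    counts = Counter(symbols)
    total = sum(counts.values())
    # p * log2(1 / p) with p = c / total; an empty input yields 0.0.
    return float(sum((c / total) * math.log2(total / c) for c in counts.values()))


# Illustrative label sequence (hypothetical): p = 0.5, 0.25, 0.25 gives 1.5 bits.
labels = ["negative", "negative", "positive", "neutral"]
h = shannon_entropy(labels)
```

A sequence with a single repeated label has zero entropy, and four equally frequent labels give the maximum of 2 bits.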

Long-Term Memory (l) Module

The Long-Term Memory (LTM) Module represents the semi-permanent memory (>60 s) that humans depend upon to ride a bicycle (procedural memory) or remember a song (declarative memory). Because simulating a software-based model of human LTM for storing and retrieving a student’s learning data and associated learning experiences is an immense challenge, a version of the Object-Attribute-Relation Model of LTM that defines finite datasets was used. However, Association replaced Relation, as defined below:

Object: the perception of an external entity and the internal concept of that perception; Attribute: a sub-variable in the invention that is used to define the properties of an object; Association1: a relationship between a pair or pairs of objects and objects, objects and attributes, or attributes and attributes.

Therefore, OAA1 = (O, A, A1), where O is a finite set of objects, each equal to a sub-variable within a dataset; A is a finite set of attributes, each equal to a dataset that portrays or illustrates an object; and A1 is a finite set of associations between objects, between objects and attributes, and between attributes.
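The OAA1 triple can be sketched as a small data structure. Storing attributes per object and associations as unordered pairs are assumptions of this sketch, as are the example object and attribute names; the text defines only the three sets and the permitted kinds of association.

```python
from dataclasses import dataclass, field
from typing import Dict, FrozenSet, Set


@dataclass
class OAAModel:
    """OAA1 = (O, A, A1): objects, their attributes, and associations between them."""
    objects: Set[str] = field(default_factory=set)                  # O
    attributes: Dict[str, Set[str]] = field(default_factory=dict)   # A, per object
    associations: Set[FrozenSet[str]] = field(default_factory=set)  # A1, unordered pairs

    def add_object(self, obj: str, *attrs: str) -> None:
        self.objects.add(obj)
        self.attributes.setdefault(obj, set()).update(attrs)

    def _known(self) -> Set[str]:
        return self.objects.union(*self.attributes.values())

    def associate(self, a: str, b: str) -> None:
        """Link object-object, object-attribute, or attribute-attribute pairs."""
        known = self._known()
        if a not in known or b not in known:
            raise KeyError("both ends of an association must already be stored")
        self.associations.add(frozenset((a, b)))

    def related(self, node: str) -> Set[str]:
        """Every object or attribute directly associated with `node`."""
        return {x for pair in self.associations if node in pair for x in pair if x != node}


# Hypothetical usage with illustrative names.
omega = OAAModel()
omega.add_object("Algebra quiz 3", "score: 92", "week 4")
omega.add_object("Study group A", "week 4")
omega.associate("Algebra quiz 3", "Study group A")
omega.associate("score: 92", "week 4")
```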

The structure of the ΩLM simulates human Declarative Memory, which comprises both episodic and semantic memory and possesses the capacity for conscious recollection. Semantic inputs are stored in the Semantic Memory component of the Declarative Memory Module, while episodic inputs from the Short-Term Memory (STM) are stored as Memory Cells in its Episodic Memory component. Episodic Memory cells are classified by various properties, known as Patterns of Activation, Visual/Textual Images, Sensory/Conceptual Inputs, Time Period, and Autobiographical Perspective [9], and by two properties introduced as key innovations: Multidimensional Dynamic Storing and Rapid Forgetting. An episodic memory cell can be defined as an element of a block within a hidden layer of a machine-learning deep neural network (DNN) model. Each block can contain thousands of memory cells, which are used to train DALI about a user’s experiences associated with a learning event (see the Object-Attribute-Association1 Model above). Each Episodic Memory cell also contains a filter that manages error flow to the cell and resolves conflicts in dynamic weight distribution. Figure 7 shows memory cell input and output data, dynamic input and output weights, and the filtering system that manages weight conflicts and error flow.
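The description of memory cells grouped into blocks, with input and output weights and a filter on error flow, resembles the memory cell of Hochreiter and Schmidhuber’s LSTM [14], listed in the references. Assuming that analogy, a single scalar cell with an input gate and an output gate (and no forget gate, as in the 1997 design) can be sketched as follows. The weights are hypothetical fixed values, and the gates see only the current input, a simplification of the recurrent connections a real network would use.

```python
import math
from dataclasses import dataclass


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


@dataclass
class MemoryCell:
    """Scalar LSTM-style memory cell with input and output gates (no forget gate)."""
    w_candidate: float    # weight on the candidate value written into the cell
    w_input_gate: float   # input gate: how much new input is written into the state
    w_output_gate: float  # output gate: how much of the stored state is exposed
    state: float = 0.0    # internal state with a fixed self-connection of 1.0

    def step(self, x: float) -> float:
        candidate = math.tanh(self.w_candidate * x)
        input_gate = sigmoid(self.w_input_gate * x)
        output_gate = sigmoid(self.w_output_gate * x)
        # Additive update through a fixed self-connection (the "constant error
        # carousel"), which lets error flow back through the state without vanishing.
        self.state = self.state + input_gate * candidate
        return output_gate * math.tanh(self.state)


cell = MemoryCell(w_candidate=1.0, w_input_gate=1.0, w_output_gate=1.0)
outputs = [cell.step(1.0) for _ in range(3)]
```

Repeated identical inputs accumulate in the state, so the gated output grows while staying between 0 and 1.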

Figure 8. DALI’s Declarative Episodic Memory Blocks and Cells Structure

The ΩLM uses these cell properties in a similar way, associating semantic memory experiences within an episodic memory rubric that includes the timeframe, patterns of activation, perspective, sound, color, and text occurring within the context of a single learning experience, in an attempt to replicate the human LTM process of capture, storage, identification, and retrieval.

Procedural Memory

Another component of the LTM is the Procedural Memory, which acquires and stores motor skills and habits in the human brain [10]. The Procedural Memory in the ΩLM contains the storage rules for the Working Memory and the STM. Within the Working Memory, the Procedural Memory dictates what types of categorization are made and how deep the categorization must be. In the STM, the Procedural Memory changes the weights used to determine whether data is defined as low entropy and/or should be forgotten. By communicating with DALI and a student’s ΩLM, the Procedural Memory determines whether the student’s ΩLM lacks usable information and, if so, passes this information to the STM to relax the restrictions of that module’s entropy filter. In short, the Procedural Memory Module acts as an advisor to the Working Memory and STM Modules, allowing greater flexibility in data storage. Figure 9 is a block diagram of the Procedural Memory Module.
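The advisory role toward the STM can be sketched as an object that holds the STM filter thresholds and relaxes them when the ΩLM contains too little usable data. The starting thresholds, the minimum item count, the step size, and the floor are all hypothetical; the categorization rules for the Working Memory are not modeled here.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class ProceduralMemory:
    """Hypothetical advisor that holds the STM filter thresholds and relaxes them on demand."""
    sentiment_threshold: float = 0.6
    emotion_threshold: float = 0.5
    min_usable_items: int = 20   # assumed minimum amount of usable data in a student's ΩLM
    step: float = 0.1            # how much to relax the thresholds per call
    floor: float = 0.1           # thresholds never drop below this value

    def advise(self, usable_items: int) -> Dict[str, float]:
        """Relax the STM thresholds if the ΩLM holds too little usable information."""
        if usable_items < self.min_usable_items:
            self.sentiment_threshold = max(self.floor, self.sentiment_threshold - self.step)
            self.emotion_threshold = max(self.floor, self.emotion_threshold - self.step)
        return {"sentiment_threshold": self.sentiment_threshold,
                "emotion_threshold": self.emotion_threshold}


pm = ProceduralMemory()
thresholds = pm.advise(usable_items=5)  # too little data, so both thresholds drop by one step
```

The floor keeps repeated relaxation from disabling the filter entirely.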

The Universal Memory Bank tags and indexes parsed student data from an integrated cohort vector experience, which is the sum of the responses to DALI’s Suggestions and Recommendations and the follow-up Helpful responses, and which may represent potential universal conditions that another student may experience in the future. DALI also stores the sum of each tagged cohort vector experience, together with whether the suggestion and Helpful solicitation succeeded or failed, outside any student’s ΩLM, decoupled from any individual student’s silhouette, within a generic Long-Term Memory (LTM) schema. If a tagged cohort vector experience is recognized as similar to another student’s conditional experience, DALI provides only previously successful recommendations to help ameliorate the issue or conflict, thereby using other students’ data to solve a different student’s similar issue. Figure 10 describes the function and process of the Universal Memory Bank (UMB).
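The UMB lookup can be sketched as a store of anonymized cohort vectors, each with its recommendation and outcome, queried for previously successful recommendations attached to sufficiently similar vectors. The text does not say how similarity is measured; cosine similarity and the 0.9 cut-off are assumptions chosen for illustration.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class CohortExperience:
    vector: List[float]   # tagged cohort vector; no student identifier is kept
    recommendation: str
    successful: bool


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class UniversalMemoryBank:
    def __init__(self, threshold: float = 0.9) -> None:
        self.threshold = threshold   # hypothetical similarity cut-off
        self.experiences: List[CohortExperience] = []

    def store(self, vector: Sequence[float], recommendation: str, successful: bool) -> None:
        # Successes and failures are both kept, decoupled from any individual student.
        self.experiences.append(CohortExperience(list(vector), recommendation, successful))

    def recommend(self, vector: Sequence[float]) -> List[str]:
        """Previously successful recommendations for similar experiences, most similar first."""
        scored = []
        for exp in self.experiences:
            if not exp.successful:
                continue
            sim = cosine(vector, exp.vector)
            if sim >= self.threshold:
                scored.append((sim, exp.recommendation))
        scored.sort(reverse=True)
        return [rec for _, rec in scored]
```

Keeping failed experiences in the bank, rather than discarding them, preserves the record the text describes while the query still returns only successful recommendations.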

Long-Term Memory Episodic Recall Promoter Apparatus (ERP)

Episodic memories consist of multiple sensory data that have been processed and associated together to allow humans to recall events. Memories consist of many interrelated components representing experiences and information stored in the human brain, and studies show that all the related components of one experience can be recollected when one of them is presented as an associated cue [16]. For the purposes of this invention, an ERP is defined as an artificial episodic memory apparatus that tempts and attracts a learner into recalling LTM information through multisensory associative exposure, using stimuli associated with the learner’s declarative memory experience. The Cognitive ERP apparatus integrates with the ΩLM, which contains a learner’s declarative memory experiences derived from joint and cohort academic, communicative, and social engagement. LTM ERPs, as seen in Figure 11, are used to assist students with recall of academic subject matter as it relates to advanced knowledge acquisition.
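Cued recall of this kind can be sketched with an inverted index from episode components to episodes: given one component, every episode containing it is recovered, and the co-occurring components become candidate stimuli for an ERP. The storage structure, the method names, and the example components are assumptions; the text does not specify how the ΩLM indexes episodic memories.

```python
from collections import defaultdict
from typing import Dict, Iterable, Set


class EpisodicStore:
    """Episodes stored as sets of components, with an inverted index for cued recall."""

    def __init__(self) -> None:
        self.episodes: Dict[str, Set[str]] = {}
        self.index: Dict[str, Set[str]] = defaultdict(set)  # component -> episode ids

    def store(self, episode_id: str, components: Iterable[str]) -> None:
        self.episodes[episode_id] = set(components)
        for component in self.episodes[episode_id]:
            self.index[component].add(episode_id)

    def recall(self, cue: str) -> Dict[str, Set[str]]:
        """All episodes containing the cue, each returned with every one of its components."""
        return {eid: self.episodes[eid] for eid in self.index.get(cue, set())}

    def promoters_for(self, target: str) -> Set[str]:
        """Components that co-occurred with `target`: candidate stimuli for an ERP."""
        cues: Set[str] = set()
        for eid in self.index.get(target, set()):
            cues |= self.episodes[eid] - {target}
        return cues


# Hypothetical usage with illustrative components.
es = EpisodicStore()
es.store("lecture 5", ["topic: photosynthesis", "image: leaf diagram", "audio: lecture clip"])
es.store("lab 2", ["topic: mitosis", "image: cell diagram"])
stimuli = es.promoters_for("topic: photosynthesis")
```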

DALI and the ΩLM Integration

Every student’s initial grouping data, dynamic regrouping datasets, and every DALI suggestion and recommendation, with the related responses, are passed through the functions of the KAS as previously described, and the results are stored in each student’s ΩLM. However, DALI subsumes many of these functions, namely those of the Working Memory (w), STM (m), and Procedural Memory Modules, while the ΩLM subsumes the function of the Declarative (LTM) Memory Module outlined within the original KAS in Figure 1. Figure 12 outlines the functions and processes of DALI and ΩLM integration.

Conclusions and Future Work

The ΩLM is a unique system and methodology that mimics human memory models to write and retrieve the student learning datasets available from ASL. DALI’s DNLN AI models parse these immense datasets using artificial cognitive memory models that include Working Memory and an STM model with a unique ML-trained entropy function to decipher, identify, tag, index, and store subject and non-subject communication and social data. Moreover, DALI stores relevant, important, and critical singular learning and social cohort datasets in the appropriate ΩLM Declarative Memory for later retrieval. Further, the datasets stored in the ΩLM, both singular and cohort, provide DALI with the sources for dynamically regrouping learners into more conducive academic environments, for corrective academic and social suggestions and recommendations, and for the episodic memory information used by the ERP apparatus to assist recall in academic contexts. After the system has been developed and implemented in an online platform, it must be tested to verify its efficacy and its ability to increase student academic achievement. Its basis in human memory models will, it is hoped, allow it to store information and function in a way similar to, and as effectively as, the human brain, but it may need to be modified as new research on human declarative memory emerges. The current mathematical models for specific modules may also need to be altered in the future to allow for more accurate retrieval, storage, and interpretation of important data. The ERP apparatus in particular will soon be tested to validate its capability to provide appropriate stimuli and aid students with long-term recall.

Although much research still needs to be conducted to prove the efficacy of the hypotheses and theories behind this learning engineering invention, a substantial body of scholarly literature derived from classroom and residential student examinations and studies supports its assumptions and provides preliminary evidence that DALI and the ΩLM may transform students’ learning experiences in the near future. Employed in a digital software platform, DALI’s learning engineering solutions offer a fresh approach to online education and address several long-standing shortfalls of legacy learning management systems (LMS). The use of deep machine-learning AI models to provide academic support structures to each individual learner, and to index and store all the results in an artificial cognitive learning map, is unique and holds promise for future iterations of the innovations to solve some of the most pressing and common extracurricular issues that have negatively affected the academic performance of online learners in the past.

References

[1] Atkinson, R. C., & Shiffrin, R. M. (1965). Mathematical models for memory and learning. Institute for Mathematical Studies in the Social Sciences, Stanford University.

[2] Baddeley, A. D., & Hitch, G. (1974). Working memory. Psychology of Learning and Motivation, 8, 47-89.

[3] Baddeley, A. D. (1999). Essentials of human memory. Psychology Press.

[4] Bengio, Y., Ducharme, R., Vincent, P., & Jauvin, C. (2003). A neural probabilistic language model. Journal of Machine Learning Research, 3, 1137–1155.

[5] Bishop, C. M. (2006). Pattern recognition and machine learning. Springer.

[6] Bradley, M. M., Codispoti, M., Cuthbert, B. N., & Lang, P. J. (2001). Emotion and motivation I: Defensive and appetitive reactions in picture processing. Emotion, 1(3), 276-298. http://dx.doi.org/10.1037/1528-3542.1.3.276

[7] Butler, A. C., Marsh, E. J., Goode, M. K., & Roediger, H. L. (2006). When additional multiple-choice lures aid versus hinder later memory. Applied Cognitive Psychology, 20(7), 941–956. https://doi.org/10.1002/acp.1239

[8] Coltheart, M. (1980). Iconic memory and visible persistence. Perception & Psychophysics, 27(3), 183–228. https://doi.org/10.3758/BF03204258

[9] Conway, M. A. (2009). Episodic memories. Neuropsychologia, 47(11), 2305–2313. https://doi.org/10.1016/j.neuropsychologia.2009.02.003

[10] Eichenbaum, H. (2000). A cortical-hippocampal system for declarative memory. Nature Reviews Neuroscience, 1(1), 41-50. https://doi.org/10.1038/35036213

[11] Engle, R. W., Tuholski, S. W., Laughlin, J. E., & Conway, A. R. (1999). Working memory, short-term memory, and general fluid intelligence: A latent-variable approach. Journal of Experimental Psychology: General, 128(3), 309.

[12] Gabrieli, J. D. (1998). Cognitive neuroscience of human memory. Annual Review of Psychology, 49(1), 87-115.

[13] Gathercole, S. E. (1999). Cognitive approaches to the development of short-term memory. Trends in Cognitive Sciences, 3(11), 410-419.

[14] Hochreiter, S., & Schmidhuber, J. (1997). Long short-term memory. Neural Computation, 9(8), 1735-1780.

[15] James, W. (1890). The principles of psychology. New York: H. Holt and Company.

[16] Jones, G. V. (1976). A fragmentation hypothesis of memory: Cued recall of pictures and of sequential position. Journal of Experimental Psychology: General, 105(3), 277-293. http://dx.doi.org/10.1037/0096-3445.105.3.277

[17] Kensinger, E. A., & Corkin, S. (2003). Memory enhancement for emotional words: Are emotional words more vividly remembered than neutral words? Memory & Cognition, 31(8), 1169–1180. https://doi.org/10.3758/BF03195800

[18] Kesner, R. P., & Rogers, J. (2004). An analysis of independence and interactions of brain substrates that subserve multiple attributes, memory systems, and underlying processes. Neurobiology of Learning and Memory, 82(3), 199-215.

[19] Massaro, D. W., & Loftus, G. R. (1996). Sensory and perceptual storage. In Memory (pp. 68-96).

[20] Mentor, Competition Entrant, Team Member, Competition Entrant, Team Member (2017). Deep Learning Intelligence System and Interfaces. U.S. PTO Patent Application Serial Number/Provisional 6246175721

[21] Newell, A. (1990). Unified theories of cognition (The William James Lectures, 1987). Harvard University Press.

[22] Ross, B. H., & Bower, G. H. (1981). Comparisons of models of associative recall. Memory & Cognition, 9(1), 1–16. https://doi.org/10.3758/BF03196946

[23] Sandeen, A. (2004). Educating the whole student: The growing academic importance of student affairs. Change: The Magazine of Higher Learning, 36(3), 28-33.

[24] Shannon, C. E. (2001). A mathematical theory of communication. ACM SIGMOBILE Mobile Computing and Communications Review, 5(1), 3-55.

[25] Squire, L. R. (2004). Memory systems of the brain: A brief history and current perspective. Neurobiology of Learning and Memory, 82(3), 171-177.

[26] Tulving, E. (2002). Episodic memory: From mind to brain. Annual Review of Psychology, 53(1), 1-25.

[27] Vapnik, V. (1998). Statistical learning theory (Vol. 1). New York: Wiley.

[28] Wagner, A. D., Schacter, D. L., Rotte, M., Koutstaal, W., Maril, A., Dale, A. M., ... & Buckner, R. L. (1998). Building memories: remembering and forgetting of verbal experiences as predicted by brain activity. Science, 281(5380), 1188-1191.

[29] Wang, Y. (2003). On cognitive mechanisms of the eyes: The sensor vs. the browser of the brain (keynote speech). In Proceedings of the 2nd IEEE International Conference on Cognitive Informatics (ICCI’03) (p. 225). London, UK.

[30] Wang, Y., Wang, Y., Patel, S., & Patel, D. (2006). A layered reference model of the brain (LRMB). IEEE Transactions on Systems, Man, and Cybernetics, Part C (Applications and Reviews), 36(2), 124–133.